How Neuroscientists Are Using Machine Learning

Fields of inquiry are becoming more and more interdisciplinary, and neuroscience and data science are no exception. In the past few years, the two fields have come to inform one another. Recent advances in neuroscience, including international research into brain mapping and the decoding of brain signals, can be tied to neuroscientists’ increasing use of data science methods. Here is how machine learning helps us better understand how the brain works, and what this kind of knowledge may lead to in the future.

Mapping the Brain

Over the past decade, neuroscientists have taken on the ambitious project of mapping the neural connections in the brain. This effort, known as the Connectome Project, aims to map the brain in order to better understand how the living brain functions.

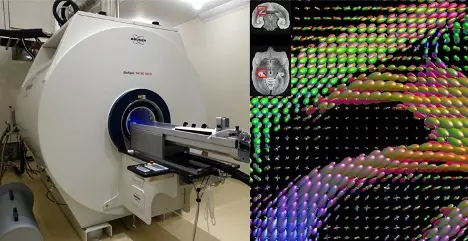

A recent study by researchers at the Okinawa Institute of Science and Technology (OIST) Graduate University, using machine learning techniques, has led to a breakthrough in the Connectome Project. The method: “Magnetic Resonance Imaging (MRI)-based fiber tracking.” This type of tracking uses the diffusion of water molecules in the brain to map neural connections. Because water diffuses more readily along nerve fibers than across them, the pattern of diffusion leaves a trail that allows neuroscientists to trace connections in the brain.
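To make the idea of following a diffusion trail concrete, here is a minimal toy sketch of streamline tracking in two dimensions. It is not the OIST method: the `principal_direction` field is a made-up stand-in for the dominant diffusion direction that would normally be estimated from MRI data, and `track_fiber`, `step`, and `n_steps` are hypothetical names chosen for illustration.

```python
import math

def principal_direction(x, y):
    """Hypothetical direction field: stands in for the dominant
    diffusion direction estimated from MRI at position (x, y)."""
    angle = 0.1 * x  # fibers bend gently as x increases (made-up rule)
    return math.cos(angle), math.sin(angle)

def track_fiber(seed, step=0.5, n_steps=20):
    """Trace one streamline by repeatedly stepping along the
    local principal direction, starting from a seed point."""
    x, y = seed
    path = [(x, y)]
    for _ in range(n_steps):
        dx, dy = principal_direction(x, y)  # unit vector
        x += step * dx
        y += step * dy
        path.append((x, y))
    return path

path = track_fiber((0.0, 0.0))
print(len(path), "points traced")
```

Real fiber tracking works in three dimensions and adds stopping rules, such as a maximum turning angle. Those rules are among the parameters that have to be tuned.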

Previously, researchers tracked nerve cell fibers in animal experiments using marmosets. This method involved injecting a fluorescent tracer into multiple brain locations to create an image showing where various nerve fibers are located. However, this practice could not ethically be used on humans, as it involved complete dissection of the brain into hundreds of slices.

Neuroscientists speculate that diffusion MRI-based fiber tracking can be used to map the whole human brain and pinpoint the differences between a healthy and a diseased brain. This may lead to a better understanding of how to treat disorders such as Parkinson’s and Alzheimer’s.

Algorithms in Brain Models

In addition to the ethical concerns around tracking nerve cell fibers, there were issues of convenience and efficiency. The results of this now outdated method were not wholly accurate, and it could not reliably detect neurons that extend to the farthest corners of the brain. As a result, researchers had to set specific parameters.

Instead of setting parameter combinations manually, researchers can now let machine learning do the work for them. Using fluorescent tracer and MRI data from ten marmoset brains, the OIST researchers tested their own algorithms against machine-learned ones. They found that the machine-generated algorithm had parameters better optimized for their data sets, which allowed them to generate a more accurate connectome of the marmoset brain.
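As a rough illustration of automated parameter tuning, the sketch below runs an exhaustive grid search and scores each parameter combination by how well its result overlaps a tracer-derived reference. Everything here is an assumption made for illustration: `run_tracking` is a toy stand-in for a real fiber-tracking run, the parameter names and region labels are hypothetical, and grid search is only one simple strategy, not necessarily the one the OIST team used.

```python
from itertools import product

# Hypothetical "ground truth" connections from tracer injections.
TRACER_CONNECTIONS = {("A", "B"), ("A", "C"), ("B", "D")}

def run_tracking(angle_threshold, min_length):
    """Toy stand-in for a fiber-tracking run: returns the set of
    connections 'found' with the given (hypothetical) parameters."""
    found = set()
    if angle_threshold >= 30:
        found.add(("A", "B"))
    if angle_threshold >= 45:
        found.add(("A", "C"))
    if min_length <= 10:
        found.add(("B", "D"))
    if angle_threshold > 60:
        found.add(("C", "D"))  # too permissive: a false positive
    return found

def dice_overlap(found, reference):
    """Dice score: 1.0 means identical sets, 0.0 means no overlap."""
    if not found and not reference:
        return 1.0
    return 2 * len(found & reference) / (len(found) + len(reference))

def grid_search(angles, lengths):
    """Try every parameter combination and keep the best-scoring one."""
    best_params, best_score = None, -1.0
    for angle, length in product(angles, lengths):
        score = dice_overlap(run_tracking(angle, length), TRACER_CONNECTIONS)
        if score > best_score:
            best_params, best_score = (angle, length), score
    return best_params, best_score

best_params, best_score = grid_search(range(10, 100, 10), [5, 10, 20])
print(best_params, best_score)
```

The point of the design is that the tracer data acts as a reference, so the search can reject settings that miss real connections as well as settings that invent false ones.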

The reason for this accuracy is that machine learning can recognize patterns hidden in complex data, and finding such patterns is one of neuroscientists’ primary concerns. Even when researchers can gather data sets, interpreting the results of studies can be difficult, if not almost impossible, without algorithms such as those developed in data science.

Hierarchical Models

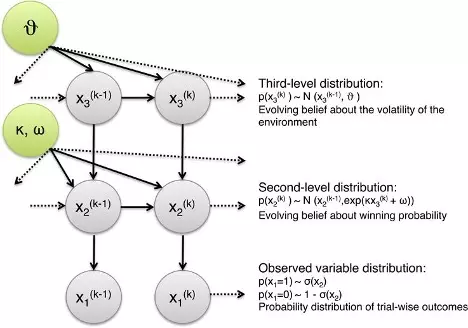

Using the algorithms described above, researchers can construct hierarchical models. A hierarchical model in data science is a model that builds on itself in increasingly sophisticated layers. Though they belong to data science, hierarchical models apply to neuroscience because they conceptualize the hierarchy of brain cognition. This means that they can be applied to the study of the human cortex, and that the hierarchy they describe may be observable through functional Magnetic Resonance Imaging (fMRI).

One type of hierarchical model that researchers have hypothesized to be useful is the Gaussian Filter Model, a non-uniform low-pass filter used to reduce noise in imaging. It essentially creates a blur that eliminates unnecessary detail in a scanned image. With this noise reduced, neuroscientists can see the overall picture and focus on only the essential and relevant data.
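The blurring effect of a Gaussian filter can be sketched in a few lines. The example below smooths a one-dimensional signal rather than a full image to stay short. The function names, the choice of `sigma`, and the edge handling (repeating the border value) are all assumptions made for this illustration.

```python
import math

def gaussian_kernel(sigma, radius):
    """Weights that fall off with distance from the center, normalized
    to sum to 1 (this is what makes the filter 'non-uniform')."""
    weights = [math.exp(-(i * i) / (2 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def gaussian_smooth(signal, sigma=1.0):
    """Replace each sample with a Gaussian-weighted average of its
    neighbors, which suppresses rapid (high-frequency) fluctuations."""
    radius = max(1, int(3 * sigma))
    kernel = gaussian_kernel(sigma, radius)
    n = len(signal)
    smoothed = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), n - 1)  # repeat border values
            acc += w * signal[j]
        smoothed.append(acc)
    return smoothed

spike = [0, 0, 0, 10, 0, 0, 0]  # a single noisy spike
print([round(v, 2) for v in gaussian_smooth(spike)])
```

The sharp spike is spread across its neighbors and its peak is lowered. This is the same blur that, applied in two dimensions, softens noise in a scanned image.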

Decoding Brain Signals with Deep Learning

Neuroscientists can decode brain signals with a technology called a Brain-Computer Interface, or BCI. A BCI is a direct communication pathway between the brain and an external device such as a wheelchair.

Neuroscientists using BCI face three main challenges: calibration, low classification accuracy, and poor generalization. In the past few years, deep learning techniques have been used to tackle all three.

Calibration is a challenge because of a low signal-to-noise ratio (SNR), the same noise problem that the Gaussian Filter Model addresses in imaging. Often, there is a level of noise that inevitably obscures the signals researchers are testing for, and attempts to filter out that noise can introduce inaccuracies and lose important information. Here, noise means any corruption of the brain signal caused either by spontaneous features of the person being studied (blinks, concentration level, etc.) or by the environment (temperature, environmental noise, etc.). Researchers can use deep learning to distinguish meaningful features from this spontaneous and involuntary noise.
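For readers unfamiliar with SNR, a common way to express it is as the ratio of signal power to noise power in decibels. The sketch below computes it for two made-up sample lists. In real recordings the clean signal and the noise are not available separately and have to be estimated, so treat this purely as a definition in code.

```python
import math

def mean_power(samples):
    """Average of the squared sample values."""
    return sum(s * s for s in samples) / len(samples)

def snr_db(signal, noise):
    """Signal-to-noise ratio in decibels: 10 * log10(P_signal / P_noise)."""
    return 10 * math.log10(mean_power(signal) / mean_power(noise))

signal = [2.0, -2.0, 2.0, -2.0]  # made-up signal, mean power 4
noise = [1.0, -1.0, 1.0, -1.0]   # made-up noise, mean power 1
print(round(snr_db(signal, noise), 2))  # prints 6.02
```

A higher value means the signal stands out more clearly from the noise. A value near or below 0 dB means the noise is as strong as the signal or stronger.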

As for poor generalization, a machine is only as useful as the human knowledge behind it. You could decode signals all day, but the decoded data is useless if you can’t draw conclusions from it. Deep learning, on the other hand, can detect patterns and draw conclusions that would be nigh impossible to reach manually.

Though it isn’t perfect, deep learning allows neuroscientists to reach conclusions they likely could not come to otherwise. Deep learning is the most accurate method researchers currently have for studying brain signals and has been applied to data from electroencephalography (EEG), event-related potentials (ERP), and fMRI.

Advantages of Deep Learning in BCI Research

To summarize, the three advantages of deep learning in BCI research are the following:

- It is less time-consuming: It learns directly from raw brain signals through back-propagation, so no additional steps are needed before the data is interpretable.

- It captures latent dependencies: It compensates for the loss of information and inaccuracy that come with non-static brain signals. It adjusts and accounts for background noise and is, therefore, able to interpret signals in a changing environment.

- It supports more powerful algorithms: Deep learning algorithms are more powerful than traditional methods such as Linear Discriminant Analysis (LDA) and Support Vector Machines (SVM).

The brain is often referred to as the “final frontier” for a good reason, and we are only now beginning to decode its mysteries. The brain may be the key to understanding our environment in the most sophisticated way. The key to unlocking that information? Data science and deep learning.